When implementing backpropagation, a test called gradient checking helps verify that the implementation is correct.

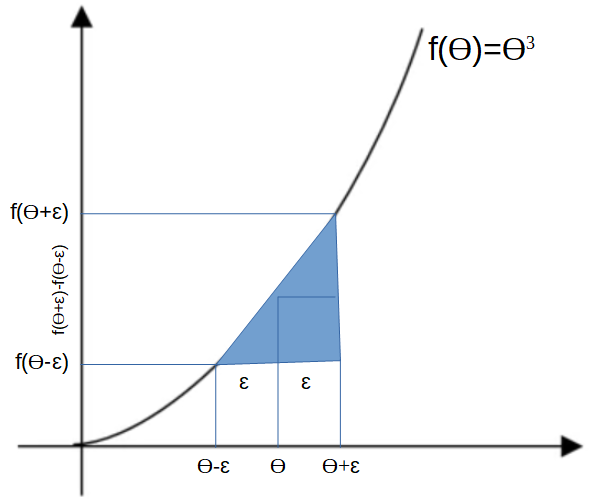

Looking at the figure above, we get a much better estimate of the gradient by using a two-sided difference, that is, the larger triangle that spans from θ − ε to θ + ε, rather than a one-sided one. The slope of this larger triangle (its height divided by its width) is:

\[ \begin{array}{l}{\frac{f(\theta+\varepsilon)-f(\theta-\varepsilon)}{2 \varepsilon} \approx g(\theta)} \\ {\frac{(1.01)^{3}-(0.99)^{3}}{2(0.01)}=3.0001} \\ {g(\theta)=3 \theta^{2}=3}\end{array} \]

Here f(θ) = θ³, θ = 1 and ε = 0.01, so the exact derivative is g(θ) = 3θ² = 3. The two-sided estimate is 3.0001, an approximation error of only 0.0001. This method is called the two-sided difference approximation.

It is important to remember that:

1. This method is not used during the training of the neural network, only for debugging.
2. If the cost function includes a regularization term, the gradient being checked must include that term as well.
3. It doesn't work with dropout, because in this case the cost function J is very difficult to compute.
4. Run it at random initialization: it is possible that the implementation of backpropagation is correct only while the weights are close to zero, so it can be worth running the check again after some training.
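
The calculation above can be reproduced with a few lines of Python. This is only a minimal sketch: the function names (`two_sided_gradient`, `one_sided_gradient`, `gradient_check`) are hypothetical, and the relative-error formula in `gradient_check` is one common convention assumed here, not something taken from the text above.

```python
import numpy as np


def f(theta):
    # Example function from the text: f(theta) = theta^3
    return theta ** 3


def g(theta):
    # Its exact derivative: g(theta) = 3 * theta^2
    return 3 * theta ** 2


def two_sided_gradient(func, theta, eps=0.01):
    # (f(theta + eps) - f(theta - eps)) / (2 * eps)
    return (func(theta + eps) - func(theta - eps)) / (2 * eps)


def one_sided_gradient(func, theta, eps=0.01):
    # (f(theta + eps) - f(theta)) / eps, shown only for comparison
    return (func(theta + eps) - func(theta)) / eps


def gradient_check(cost, grad, theta, eps=1e-7):
    """Compare an analytic gradient with a two-sided numerical one.

    cost: maps a 1-D parameter vector to a scalar
    grad: maps the same vector to the analytic gradient (same shape)
    Returns a relative error (assumed convention):
        ||approx - analytic|| / (||approx|| + ||analytic||)
    """
    theta = np.asarray(theta, dtype=float)
    approx = np.zeros_like(theta)
    for i in range(theta.size):
        step = np.zeros_like(theta)
        step[i] = eps  # perturb one parameter at a time
        approx[i] = (cost(theta + step) - cost(theta - step)) / (2 * eps)
    analytic = grad(theta)
    numerator = np.linalg.norm(approx - analytic)
    denominator = np.linalg.norm(approx) + np.linalg.norm(analytic)
    return numerator / denominator


if __name__ == "__main__":
    print(two_sided_gradient(f, 1.0))  # close to 3.0001
    print(one_sided_gradient(f, 1.0))  # close to 3.0301
    print(g(1.0))                      # exact value: 3.0

    theta0 = np.array([1.0, 2.0, -0.5])
    print(gradient_check(lambda t: np.sum(t ** 3), lambda t: 3 * t ** 2, theta0))
```

With θ = 1 and ε = 0.01, the one-sided estimate is off by about 0.03 while the two-sided estimate is off by about 0.0001, which is why the two-sided form is preferred for the check. For a correct gradient the value returned by `gradient_check` should be very small; a clearly larger value suggests a bug in the analytic gradient.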

Reference: Coursera deep neural network course